Here are my Honours Projects for 2015. Projects are based around topics in computational creativity, agent-based modelling, evolutionary computing, computer graphics, virtual reality, interactivity, wearable electronics and devices, and digital music. Projects will take place within sensiLab, a new collaborative research studio based at Caulfield Campus. If you are interested in any of these areas and would like to know more, please come and talk with me.

IMPORTANT: Please come and see me about any project you intend to do before selecting it.

4D Printing is a special kind of 3D printing, designed to fabricate physical 3D objects that can change and self-assemble after they are printed. One application is printing objects with a large surface area within the limited print volume of current 3D printers. The aim of this project is to devise software that uses physics simulation and constraints to correctly "fold" large connected objects and surfaces so they can be printed. There will also be opportunities to experiment with self-assembling structures generated algorithmically. Access to 3D printing facilities will be provided so you can test and print your generated models.
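As a very small illustration of the "fold under constraints" idea, the sketch below treats a printed strip as a planar chain of rigid, equal-length panels joined by hinges, computes the chain's shape by forward kinematics, and searches for the least-folded accordion (concertina) configuration whose 2D footprint fits the print bed. The function names, the accordion fold pattern and the bed dimensions are assumptions for illustration only; a real solution would also need panel thickness, self-collision and a physical model of the material.

```python
import math

def chain_points(panel_len, hinge_angles):
    """Forward kinematics for a planar chain of rigid panels joined by hinges.

    The first panel lies along +x; hinge_angles[i] is the turn (radians)
    applied at hinge i. Returns the list of panel end points.
    """
    x, y, heading = panel_len, 0.0, 0.0
    points = [(0.0, 0.0), (x, y)]
    for angle in hinge_angles:
        heading += angle
        x += panel_len * math.cos(heading)
        y += panel_len * math.sin(heading)
        points.append((x, y))
    return points

def footprint(points):
    """Width and depth of the axis-aligned bounding box around the chain."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return max(xs) - min(xs), max(ys) - min(ys)

def fold_to_fit(n_panels, panel_len, bed_width, bed_depth, steps=180):
    """Fold a strip accordion-style (alternating +theta/-theta hinges), starting
    flat and folding further until its footprint fits the bed.
    Returns (theta, points) for the least-folded fit, or None if none fits."""
    for i in range(steps + 1):
        theta = math.pi * i / steps
        angles = [theta if k % 2 == 0 else -theta for k in range(n_panels - 1)]
        points = chain_points(panel_len, angles)
        width, depth = footprint(points)
        if width <= bed_width and depth <= bed_depth:
            return theta, points
    return None
```

For example, `fold_to_fit(6, 50.0, 200.0, 200.0)` asks how far a 300 mm strip of six 50 mm panels would need to fold to fit a hypothetical 200 x 200 mm bed. Replacing the brute-force angle sweep with a physics or constraint solver is where the project's real work would lie.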

URLs and bibliographic references to background reading

[1] Skylar Tibbits: The emergence of "4D printing" (TED Talk)

[2] Nervous System, Kinematics (Web site)

Pre- and co-requisite knowledge and units studied

Successful completion of FIT3088 Computer Graphics or equivalent.

UAVs (Unmanned Aerial Vehicles), commonly called "drones", are becoming popular devices for experimental robotics. Currently, drones normally require a human operator, and their level of "intelligence" or autonomy is very limited. Recent developments in low-cost computing and sensor technology mean we can experiment with drones that have far greater autonomy, such as the ability to find a person or location, avoid obstacles or "return to home" when their batteries are low.

Aim and Outline

In this aerial robotics project you will build a UAV that will fly indoors. The aircraft is a helium balloon (blimp) that uses an ultrasound sensor to detect its altitude and vectored propeller thrust to maintain a constant altitude. The balloon navigates around an infra-red beacon using infra-red sensors mounted at the front, back, left and right. Turns are effected by differentially powering one or both of the blimp's two electric motors. The project is described here: http://diydrones.com/profiles/blog/show?id=705844%3ABlogPost%3A44817

One of the challenges of the project is to control the drone using the Raspberry Pi (a low-cost, credit-card-sized single-board computer). You will need to convert the original Arduino design so that it works on the Pi. This presents a design challenge: the blimp can lift only a relatively small weight, so all sensors, computers, power supplies, etc. must fit within that payload. Extensions to the project could involve researching an alternative indoor navigation technology, perhaps a Wi-Fi-based spatial location sensing system. Once the UAV is working it can become a platform for a camera (which could, for example, recognise faces or other environmental features) or a surface onto which designs might be projected.
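The altitude-hold and beacon-following behaviour can be sketched as two small control functions: a textbook PID controller that turns the ultrasound altitude reading into a vertical thrust command, and a differential-thrust rule that steers towards the side whose IR sensor sees the beacon more strongly. This is a sketch under assumptions, not the controller from the DIY Drones design: readings are plain numbers (altitude in metres, IR intensity as a non-negative value), only the left and right IR sensors are used, and the gains and power scale are placeholders that would need tuning on the real blimp.

```python
from dataclasses import dataclass
from typing import Optional

def clamp(value, low, high):
    """Limit a command to the range the motors accept."""
    return max(low, min(high, value))

@dataclass
class PID:
    """Textbook PID controller; the gains are placeholders, not tuned values."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint, measured, dt):
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def steer(ir_left, ir_right, base=0.5, gain=0.5):
    """Differential thrust: slow the motor on the side where the beacon is
    stronger so the blimp yaws towards it. Returns (left, right) power in [0, 1]."""
    total = ir_left + ir_right
    if total <= 0:
        return base, base  # beacon not seen: fly straight (a real system might search)
    turn = gain * (ir_left - ir_right) / total
    return clamp(base - turn, 0.0, 1.0), clamp(base + turn, 0.0, 1.0)
```

On the Pi, each control tick would read the sensors, pass `pid.update(target, altitude, dt)` through `clamp` to drive the vertical thrust, and use `steer(...)` for the two drive motors; how the sensors and motors are actually wired and driven is left open here.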

URLs and bibliographic references to background reading

[1] http://diydrones.com/profiles/blog/show?id=705844:BlogPost:44817

[2] Raspberry Pi (Web site)

Pre- and co-requisite knowledge and units studied

You will need skills in electronic assembly (soldering and breadboard prototyping), together with some knowledge of the Processing programming language. The project will be based in sensiLab at Caulfield Campus, where students will have access to all necessary hardware for the project.

Do you enjoy hacking electronics and programming small computers? In this project you will design and build a new kind of digital video camera based around the Raspberry Pi computer. The goal is for the device to be portable, programmable (locally and online), self-powered and with many capabilities not found in conventional cameras. These include the ability to start recording when certain events occur, such as movement close to the camera. More advanced triggers could include face detection, changes in environmental conditions, or pre-set time periods. The idea is to place the camera in a remote location, such as a wilderness area or jungle, and capture local animal movements, weather events, environmental changes, etc.

As part of the project you will need to work with a variety of sensors (proximity, light, etc.), camera modules, touchscreens and information displays, solar panels and batteries, connect these to the Pi and write the software to co-ordinate the camera's functionality. All the necessary hardware will be provided as part of the project.
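A simple way to implement the "start recording when something moves" trigger is frame differencing: compare each new frame with the previous one and measure how many pixels changed noticeably. The sketch below assumes greyscale frames supplied as lists of rows of 0-255 values (on the Pi these would come from the camera module through whichever capture library you choose) and uses arbitrary placeholder thresholds. It keeps recording for a number of quiet frames after motion stops so clips are not cut off abruptly.

```python
def changed_fraction(prev, curr, pixel_threshold=25):
    """Fraction of pixels whose grey level differs by more than pixel_threshold."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same dimensions")
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0

class MotionTrigger:
    """Start recording on motion; stop after `quiet_frames` consecutive still frames."""

    def __init__(self, area_threshold=0.02, quiet_frames=30):
        self.area_threshold = area_threshold
        self.quiet_frames = quiet_frames
        self.prev = None
        self.recording = False
        self.quiet = 0

    def feed(self, frame):
        """Process one greyscale frame (list of rows); returns whether to record it."""
        if self.prev is not None:
            moving = changed_fraction(self.prev, frame) > self.area_threshold
            if moving:
                self.recording, self.quiet = True, 0
            elif self.recording:
                self.quiet += 1
                if self.quiet >= self.quiet_frames:
                    self.recording = False
        self.prev = frame
        return self.recording
```

Other triggers mentioned above (face detection, environmental sensors, timers) could feed the same recording decision alongside `MotionTrigger`.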

URLs and bibliographic references to background reading

Check out the Raspberry Pi web site for the basics on how this device works.

Pre- and co-requisite knowledge and units studied

Some interest in electronics and making physical things would be helpful.

Digital technologies have radically changed the possibilities for design and architecture. This project will use generative and procedural techniques in computer graphics to generate two- and three-dimensional spatial patterns, and then apply them to problems in architecture and design.

For the project you will build a software application that architects and designers can use as a source of inspiration and as the basis for creating interesting new designs. Generative design is a powerful technique that uses a process or algorithm to build a design. By changing the algorithm's parameters, variations within a design space can be readily explored on a computer. We can even use techniques such as artificial evolution to discover new designs that would be difficult or impossible to find manually. Completed designs can be made into physical objects via 3D printing technologies.
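The parameter-space exploration and artificial evolution mentioned above can be sketched as a (1+lambda) evolution strategy: mutate the current design's parameter vector several times, keep the best child if it scores at least as well as the parent, and repeat. The `stand_in_fitness` function and its `TARGET` values are hypothetical placeholders; in a design tool the score might instead come from a designer's ratings or from measurable properties of the generated pattern.

```python
import random

def evolve(fitness, initial, sigma=0.1, generations=100, offspring=8, seed=0):
    """(1+lambda) evolution strategy over a vector of design parameters.

    Each generation makes `offspring` Gaussian mutations of the parent and
    keeps the best child if it scores at least as well (elitist selection).
    """
    rng = random.Random(seed)
    parent = list(initial)
    parent_fit = fitness(parent)
    for _ in range(generations):
        children = [[g + rng.gauss(0.0, sigma) for g in parent] for _ in range(offspring)]
        scored = [(fitness(c), c) for c in children]
        best_fit, best = max(scored, key=lambda pair: pair[0])
        if best_fit >= parent_fit:
            parent, parent_fit = best, best_fit
    return parent, parent_fit

# Hypothetical stand-in fitness: prefer one particular combination of, say,
# rotation, scale and density parameters.
TARGET = [0.25, 1.5, 0.6]

def stand_in_fitness(params):
    return -sum((p - t) ** 2 for p, t in zip(params, TARGET))
```

Because selection is elitist, the returned design never scores worse than the starting one; swapping `stand_in_fitness` for interactive ratings turns this into interactive evolution.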

Spatial patterns can be generated using various modes of symmetry, based on parameterised processes of repetition and variation. Stochastic techniques, such as Perlin noise, can provide visually interesting variation, breaking the geometric formalism normally associated with computer designs. In addition to solving technical challenges in computer graphics and interaction, this project will also require you to research different cultural interpretations of spatial patterning, in particular Japanese and other Asian traditions of pattern making inspired by nature.
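To make the noise-plus-symmetry idea concrete, the sketch below implements 2D gradient noise in the style of Perlin's improved noise (a seeded permutation table, the 6t^5 - 15t^4 + 10t^3 fade curve and bilinear blending of the four corner contributions), then imposes mirror symmetry about both centre lines by sampling one quadrant and reflecting it. The eight-direction gradient table and the `mirrored_pattern` helper are simplifying assumptions, not a reference implementation; other symmetry operations (rotations, glide reflections) could be applied the same way.

```python
import math
import random

class Perlin2D:
    # Eight gradient directions (a simplification of Perlin's reference code).
    GRADS = [(1, 1), (-1, 1), (1, -1), (-1, -1), (1, 0), (-1, 0), (0, 1), (0, -1)]

    def __init__(self, seed=0):
        perm = list(range(256))
        random.Random(seed).shuffle(perm)
        self.perm = perm + perm  # doubled so lookups never need to wrap

    @staticmethod
    def _fade(t):
        # 6t^5 - 15t^4 + 10t^3: zero 1st and 2nd derivatives at t = 0 and t = 1
        return t * t * t * (t * (t * 6 - 15) + 10)

    def _corner(self, ix, iy, dx, dy):
        # Hash the lattice corner to a gradient and dot it with the offset vector
        gx, gy = self.GRADS[self.perm[self.perm[ix] + iy] & 7]
        return gx * dx + gy * dy

    def noise(self, x, y):
        x0, y0 = math.floor(x), math.floor(y)
        xf, yf = x - x0, y - y0
        xi, yi = x0 & 255, y0 & 255
        u, v = self._fade(xf), self._fade(yf)
        n00 = self._corner(xi, yi, xf, yf)
        n10 = self._corner(xi + 1, yi, xf - 1, yf)
        n01 = self._corner(xi, yi + 1, xf, yf - 1)
        n11 = self._corner(xi + 1, yi + 1, xf - 1, yf - 1)
        bottom = n00 + u * (n10 - n00)
        top = n01 + u * (n11 - n01)
        return bottom + v * (top - bottom)

def mirrored_pattern(noise, size, scale=0.1):
    """size x size grid with mirror symmetry about both centre lines,
    built by sampling one quadrant of noise and reflecting it."""
    half = (size + 1) // 2
    grid = [[0.0] * size for _ in range(size)]
    for i in range(half):
        for j in range(half):
            value = noise.noise(i * scale, j * scale)
            for a in (i, size - 1 - i):
                for b in (j, size - 1 - j):
                    grid[a][b] = value
    return grid
```

Gradient noise is zero at every integer lattice point, so the `scale` parameter (or an offset) should be chosen so samples do not all land on lattice points.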

The project will be co-supervised with Tim Schork from the Department of Architecture and there will be opportunities to work in collaboration with architecture students as you develop the system.

URLs and bibliographic references to background reading

[1] Liotta, S-J A. and M. Belfiore, Patterns and Layering: Japanese Spatial Culture, Nature and Architecture, Gestalten, 2012

[2] Stevens, P.S., Handbook of Regular Patterns: An Introduction to Symmetry in Two Dimensions, MIT Press, 1980

[3] Ebert, D.S. et al., Texturing and Modeling: A Procedural Approach (3rd edition), Morgan Kaufmann, 2002

[4] Akenine-Möller, T. et al., Real-Time Rendering (3rd edition), A K Peters/CRC Press, 2008

Pre- and co-requisite knowledge and units studied

Successful completion of FIT3088 Computer Graphics or equivalent. An interest in design or architecture would be an advantage.

Can a machine independently generate something that we would consider artistic or creative? This question goes back to the origins of computer science. Lady Lovelace famously declared in 1842 that the machine can only do what we tell it to do: it cannot "originate anything". Does creativity reside only in the programmer, not the program? There have been many famous models and programs that supposedly demonstrate machine creativity, such as Harold Cohen's AARON (an automated painter) and David Cope's EMI (a program that composes music). Early efforts in Artificial Intelligence largely overlooked creativity, focusing instead on logical problem solving, but more recently understanding creativity has become an important focus in AI.

In this project you will investigate and devise computational models of creativity. The basis for investigations will be an agent-based model, where a population of creative agents try to produce artifacts that are novel, surprising and valuable. These artifacts will be judged by a separate group of critic agents. Both creative and critic agents have the ability to learn and evolve, so over time we would expect a co-evolutionary "arms race" as both creative and critic agents try to improve. By studying the model we hope to observe and understand creative phenomena, such as the origin of good ideas and how they spread through a population.
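A minimal starting point for the creator/critic model might look like the sketch below. Creators mutate their current artifact (here just a vector of numbers) and keep a variation only if the critic rates it at least as highly; the critic scores artifacts by novelty (mean distance to the nearest artifacts it has already seen, capped at 1) multiplied by closeness to a "taste" that drifts towards whatever it accepts. Everything here is an illustrative assumption: a single learning critic stands in for the evolving population of critics, and the novelty measure, cap and learning rule are placeholders for the models the project would devise.

```python
import math
import random

def novelty(artifact, archive, k=3):
    """Mean distance to the k nearest artifacts already seen (inf if none)."""
    if not archive:
        return math.inf
    distances = sorted(math.dist(artifact, seen) for seen in archive)
    nearest = distances[:k]
    return sum(nearest) / len(nearest)

class Critic:
    """Scores artifacts by capped novelty times closeness to a learned taste."""

    def __init__(self, taste, k=3, learning_rate=0.1):
        self.taste = list(taste)
        self.k = k
        self.learning_rate = learning_rate
        self.archive = []

    def judge(self, artifact):
        value = 1.0 / (1.0 + math.dist(artifact, self.taste))
        return min(novelty(artifact, self.archive, self.k), 1.0) * value

    def learn(self, artifact):
        # Remember the artifact and drift the critic's taste towards it.
        self.archive.append(list(artifact))
        self.taste = [t + self.learning_rate * (a - t) for t, a in zip(self.taste, artifact)]

class Creator:
    def __init__(self, dims, rng):
        self.rng = rng
        self.artifact = [rng.random() for _ in range(dims)]

    def propose(self, sigma=0.1):
        return [a + self.rng.gauss(0.0, sigma) for a in self.artifact]

def run(steps=50, n_creators=5, dims=2, seed=1):
    rng = random.Random(seed)
    creators = [Creator(dims, rng) for _ in range(n_creators)]
    critic = Critic([0.5] * dims)
    history = []
    for _ in range(steps):
        for creator in creators:
            candidate = creator.propose()
            # Adopt a variation only if the critic rates it at least as highly.
            if critic.judge(candidate) >= critic.judge(creator.artifact):
                creator.artifact = candidate
        best = max(creators, key=lambda c: critic.judge(c.artifact))
        critic.learn(best.artifact)
        history.append(list(best.artifact))
    return history, critic
```

Because an accepted artifact immediately loses its novelty, creators are pushed to keep moving, a crude version of the arms race described above; `history` records which ideas the critic accepted over time.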

URLs and bibliographic references to background reading

[1] McCormack, J., Pablo eCasso? In search of the first computer masterpiece, The Conversation, 15 November 2012

[2] McCormack, J. and M. d'Inverno, Computers and Creativity, Springer, Berlin, 2012

[3] Boden, M., Creativity and Art: Three Roads to Surprise, Oxford UP, 2010

With many new technologies now available for experiencing virtual space, such as the CAVE 2 and Oculus Rift, attention is increasingly turning to how people can interact and collaborate in a virtual environment. With a head-mounted display such as the Rift, interaction is quite problematic because users cannot see their own bodies, which makes interactive manipulation difficult. The aim of this project is to develop a prototype system that allows two or more people to remotely interact in a shared virtual world. Interaction would include moving and manipulating virtual objects using simple gestures, navigation and movement, and voice recognition. Students working on this project will have access to the Oculus Rift (developer version) along with a variety of interactive devices.
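One common building block for keeping a shared virtual world consistent between remote participants is to replicate each object's state as a last-writer-wins register ordered by Lamport timestamps, so every client converges on the same result regardless of the order in which updates arrive. The sketch below illustrates that technique only; it is not an Oculus Rift or CAVE 2 API, networking, gesture input and voice recognition are left out, and the `Update` message fields are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Update:
    object_id: str
    position: tuple
    timestamp: int  # Lamport clock value when the change was made
    client_id: str  # breaks ties between concurrent updates deterministically

class SharedWorld:
    def __init__(self, client_id):
        self.client_id = client_id
        self.clock = 0
        self.objects = {}  # object_id -> latest Update

    def local_move(self, object_id, position):
        """Apply a local change and return the update to broadcast to peers."""
        self.clock += 1
        update = Update(object_id, tuple(position), self.clock, self.client_id)
        self.apply(update)
        return update

    def apply(self, update):
        """Merge a local or remote update; the higher (timestamp, client_id) wins."""
        self.clock = max(self.clock, update.timestamp)
        current = self.objects.get(update.object_id)
        if current is None or (update.timestamp, update.client_id) > (
            current.timestamp,
            current.client_id,
        ):
            self.objects[update.object_id] = update

    def position(self, object_id):
        return self.objects[object_id].position
```

If two users grab the same object at the same moment, both clients deterministically settle on the same winner; a richer design might instead lock objects while they are held, which avoids one user's hand "losing" the object.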

URLs and bibliographic references to background reading

[1] Brenda Laurel, Computers as Theatre (2nd Edition), Addison-Wesley 2014.

Pre- and co-requisite knowledge and units studied

Good basic knowledge of computer graphics and an interest in interaction design.

Creative software in areas such as 3D animation or digital music synthesis can be difficult to operate, even for experienced users. People are often looking for highly specific results, adjusting tens or even hundreds of parameters (see this interface for example) to get them. Learning not only how the software works, but how to get the desired results from it, usually requires thousands of hours of practice. This approach has several problems: the user must learn a complex interface; there are too many parameters to control at once; it is difficult to judge the state of the system in the visual field; and interpolating between different parameter sets is very difficult. Is there a better way?

The aim of this project is to investigate new forms of adaptive interfaces, where manipulation of one parameter changes many different parameters in a coherent way. To make things easier for the user we need to reduce a high-dimensional parameter space (10-100 control parameters) to a low-dimensional space (2-3 dimensions) that can easily be visualised and interacted with. The interface should be able to dynamically adapt based on feedback from the users of the system. If one user finds an interesting point in the parameter space this can be shared with others, over time leading to a map of the creative space of the system, somewhat similar to recommender systems found in on-line music or book stores, for example.

The challenge of this project is to develop an intuitive interface that exploits the graphics capabilities of modern GPUs.
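A simple version of the two-to-many mapping in [2] places a handful of preset parameter vectors at points on a 2D plane and interpolates between them as the user moves a cursor. Bencina's Metasurface uses natural neighbour interpolation; the sketch below swaps in the simpler inverse-distance weighting, so treat it as an illustration of the idea rather than a reproduction of that method. The preset layout and the `power` parameter are assumptions.

```python
import math

def idw_map(point, presets, power=2.0, eps=1e-12):
    """Map a 2D control point to a full parameter vector.

    presets: list of ((x, y), [p1, p2, ...]) pairs, all vectors the same length.
    Each preset is weighted by 1 / distance**power; a point sitting exactly on
    a preset returns that preset unchanged.
    """
    weights = []
    for (px, py), params in presets:
        d = math.hypot(point[0] - px, point[1] - py)
        if d < eps:
            return list(params)
        weights.append(1.0 / d ** power)
    total = sum(weights)
    n = len(presets[0][1])
    return [
        sum(w * params[i] for w, (_, params) in zip(weights, presets)) / total
        for i in range(n)
    ]
```

A higher `power` makes each preset dominate a larger neighbourhood around itself; an adaptive interface could also move or add presets as users share interesting points.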

URLs and bibliographic references to background reading

[1] Tenenbaum, J.B., V. de Silva and J.C. Langford, A Global Geometric Framework for Nonlinear Dimensionality Reduction, Science 290 (5500): 2319-2323, 22 December 2000

[2] Bencina, R., The Metasurface – Applying Natural Neighbour Interpolation to Two-to-Many Mapping, Proceedings of the 2005 International Conference on New Interfaces for Musical Expression (NIME05), Vancouver, Canada, 2005

Pre- and co-requisite knowledge and units studied

Successful completion of FIT3088 Computer Graphics or equivalent. A good understanding of relevant mathematics (linear algebra, calculus) would be advantageous.

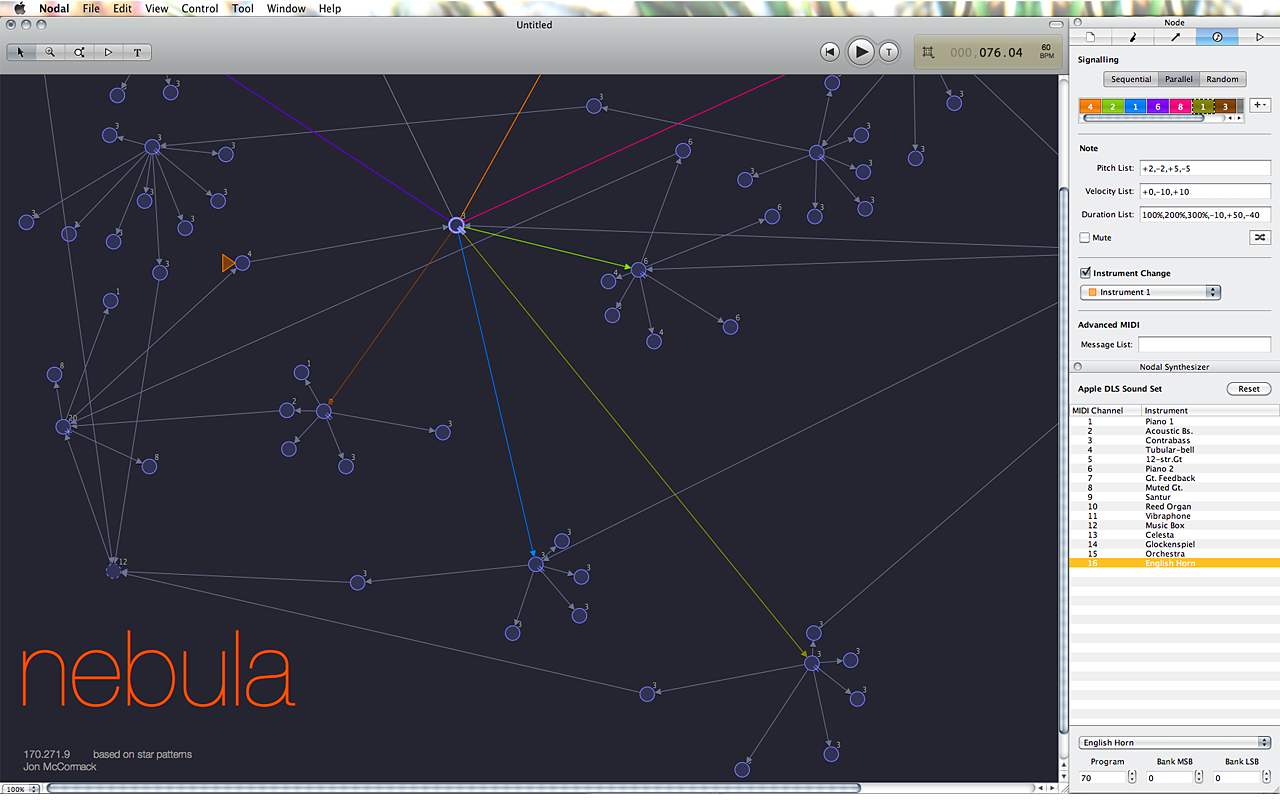

Nodal is a new type of software for composing music, developed at Monash and sold commercially. Nodal uses a user-created network of nodes (musical events) and edges (transitions between events) to create a generative musical system. A number of virtual players traverse the network, playing the notes they encounter in each node as they move around. Different players can start from different points on the network, and the physical edge length determines the time between events (you can think of it as players travelling at a constant speed, so the greater the edge distance from one node to the next, the longer the time between playing notes). You can find out more about Nodal (including a free trial download) here.
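The traversal rule described above (players moving at constant speed, so the time between notes is edge length divided by speed) can be sketched as follows. The dictionary-based network, the MIDI note numbers and the "take the first outgoing edge unless a random generator is supplied" policy are assumptions for illustration; they do not reflect Nodal's internal data structures or how it chooses between multiple outgoing edges.

```python
def traverse(notes, edges, start, speed, max_events, rng=None):
    """One player's performance as a list of (time, pitch) events.

    notes: node -> MIDI pitch; edges: node -> list of (target, length).
    """
    time, node, events = 0.0, start, []
    for _ in range(max_events):
        events.append((time, notes[node]))
        options = edges.get(node, [])
        if not options:
            break  # dead end: this player stops
        target, length = rng.choice(options) if rng else options[0]
        time += length / speed  # constant speed: longer edge, longer gap
        node = target
    return events

def perform(notes, edges, starts, speed, max_events):
    """Several players starting at different nodes, merged in time order."""
    merged = []
    for start in starts:
        merged.extend(traverse(notes, edges, start, speed, max_events))
    return sorted(merged)
```

For a three-node cycle, `traverse` simply loops round the network, with the rhythm set entirely by the edge lengths.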

Nodal saves and loads networks in an XML format. The aim of this project is to read and analyse music recorded in sequential notation (e.g. encoded as a standard MIDI file) and try to intelligently convert it into a Nodal network. The trick, of course, is in the "intelligently" bit. The method you develop must be able to look for repeated sequences, create efficient network structures and make musically meaningful distinctions between different parts of the sequential input (WARNING: this is not a trivial problem). So this project is somewhat related to information compression, with the additional constraint of retaining the musical and performance features of the source material.
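A naive baseline for the conversion task, useful mainly to show why the "intelligently" part matters, is to make one node per distinct pitch with weighted edges for observed transitions, and to flag repeated note sequences (n-grams) as candidates for shared network structure. The sketch assumes the MIDI file has already been parsed into a list of MIDI note numbers; MIDI parsing and Nodal's XML output are not shown, since neither format is described here. Note that the pitch-per-node graph throws away rhythm and ordering context that a real solution would need to preserve.

```python
from collections import Counter, defaultdict

def transition_graph(pitches):
    """One node per distinct pitch; edge weights count observed transitions."""
    edges = defaultdict(Counter)
    for current, following in zip(pitches, pitches[1:]):
        edges[current][following] += 1
    return edges

def repeated_ngrams(pitches, n, min_count=2):
    """Note sequences of length n that occur at least min_count times."""
    counts = Counter(tuple(pitches[i:i + n]) for i in range(len(pitches) - n + 1))
    return {gram: count for gram, count in counts.items() if count >= min_count}
```

A repeated phrase found by `repeated_ngrams` could become a single chain of nodes that players revisit, which is where the link to compression comes in.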

URLs and bibliographic references to background reading

[1] McCormack, J. and P. McIlwain, "Generative Composition with Nodal", in E. R. Miranda (ed.), A-Life for Music: Music and Computer Models of Living Systems, A-R Editions, Inc., Middleton, Wisconsin, 2011, pp. 99-113

Pre- and co-requisite knowledge and units studied

Some basic musical knowledge or background in music (yes - playing the guitar is ok!) will be necessary to complete this project.

Umbilical is a new platform for active crowd participation using mobile devices, wearables, and Internet of Things technologies. There are several different projects here related to the Umbilical and uNet platforms, which have been developed by a US-based team. In these projects you will work with the team to explore the potential of this exciting new technology.

uNet Projects

Rebalance uNet tree

As nodes join and leave a uNet network, there will inevitably be situations where a large section of nodes must find a new connection to the larger network. Normally the network grows organically, which naturally produces a balanced tree: overloaded nodes do not respond to "available node" requests, so the network self-balances. However, when a node high in the tree suddenly drops out, there will be a flood of reconnection requests, which can result in both an unbalanced node structure and a processing slow-down while all the reconnection requests are handled.

This task is to evaluate different rebalancing algorithms to determine which are best suited to uNet. Selected algorithms can include questions of:

References:

What is a balanced tree: http://webdocs.cs.ualberta.ca/~holte/T26/balanced-trees.html

AVL tree rebalancing: http://en.wikipedia.org/wiki/AVL_tree

Binary tree rebalancing: http://www.stoimen.com/blog/2012/07/03/computer-algorithms-balancing-a-binary-search-tree/
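As one candidate to compare against rotation-based schemes such as AVL trees (which rebalance binary search trees by key order, a property a uNet tree may not have), the sketch below reattaches orphaned nodes breadth-first to the shallowest node that still has spare capacity, keeping the tree as shallow as possible. The `children` map, the fixed `max_children` capacity and the assumption of a central view of the tree are all hypothetical; a real uNet node would only see local information and reconnection requests.

```python
from collections import deque

def reattach(children, root, orphans, max_children):
    """Attach each orphan to the shallowest node with spare capacity.

    children: dict node -> list of child nodes (the surviving tree).
    Orphans that head their own subtrees keep their existing children.
    """
    for orphan in orphans:
        queue = deque([root])
        while queue:
            node = queue.popleft()
            kids = children.setdefault(node, [])
            if len(kids) < max_children:
                kids.append(orphan)
                children.setdefault(orphan, [])
                break
            queue.extend(kids)  # this level is full: look one level deeper
    return children

def depth(children, root):
    """Number of levels in the tree below (and including) root."""
    return 1 + max((depth(children, k) for k in children.get(root, [])), default=0)
```

Each reattachment costs a breadth-first scan, which is exactly the kind of cost a flood of reconnection requests would expose; a comparison could measure both resulting depth and total work.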

uNet implementations on Linux/Windows/OSX

The uNet platform would benefit from having native implementations on non-mobile platforms (Linux, Windows and OSX). This could take the form of a simple application (chat, for example), delayed delivery, a driver (for the adventurous), a port of our iOS client to Windows or something altogether different.

uNet testing suite

A full testing suite that builds a complex node structure, sends packets around, interrogates the routing tables, and adds and removes nodes (both in the middle of the tree and at the leaves), etc. The suite could include metrics such as the number of nodes a packet traverses to get from source to destination, the size of routing tables, the average/maximum number of routing-table queries needed to find next-hop information for a packet, and alternative models for storing the routing table to save space.
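For the hop-count metric, one simple model assumes packets travel only along tree edges: up from the source to the lowest common ancestor, then down to the destination. The sketch below computes that hop count from a hypothetical `parent` map (child to parent) and averages it over all node pairs; if uNet routing can take shortcuts, real hop counts would likely be lower, so treat this as a baseline under that assumption.

```python
from itertools import combinations

def path_to_root(parent, node):
    """Nodes from `node` up to the root, inclusive."""
    path = [node]
    while node in parent:
        node = parent[node]
        path.append(node)
    return path

def hop_count(parent, src, dst):
    """Edges traversed going up from src to the lowest common ancestor, then down to dst."""
    up = {n: i for i, n in enumerate(path_to_root(parent, src))}
    for j, n in enumerate(path_to_root(parent, dst)):
        if n in up:
            return up[n] + j
    raise ValueError("nodes are not in the same tree")

def average_hops(parent, nodes):
    """Mean hop count over every unordered pair of distinct nodes."""
    pairs = list(combinations(nodes, 2))
    return sum(hop_count(parent, a, b) for a, b in pairs) / len(pairs)
```

Running `average_hops` before and after adding or removing nodes gives a quick signal of whether the structure is drifting out of balance.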

Packet structure evaluation

The initial packet structure for uNet needs to be evaluated for performance and alternatives. Tasks in this area include:

Three-level security

The uNet security model is dependent upon a three-level security chain. This differs from the typical PKI situation in that there is a third certificate used in the construction of the trust. Tasks include:

Hardware implementations

Umbilical

Littlebits

Framework

Umbilical modularity

Pre- and co-requisite knowledge and units studied

iOS and/or Android development experience.
